Multimodal AI: How Visual Search, AR & Voice Are Merging the Shopping Experience

Multimodal AI in retail is transforming how consumers discover, evaluate, and purchase products by blending vision, speech, and interaction into a seamless, intuitive journey.

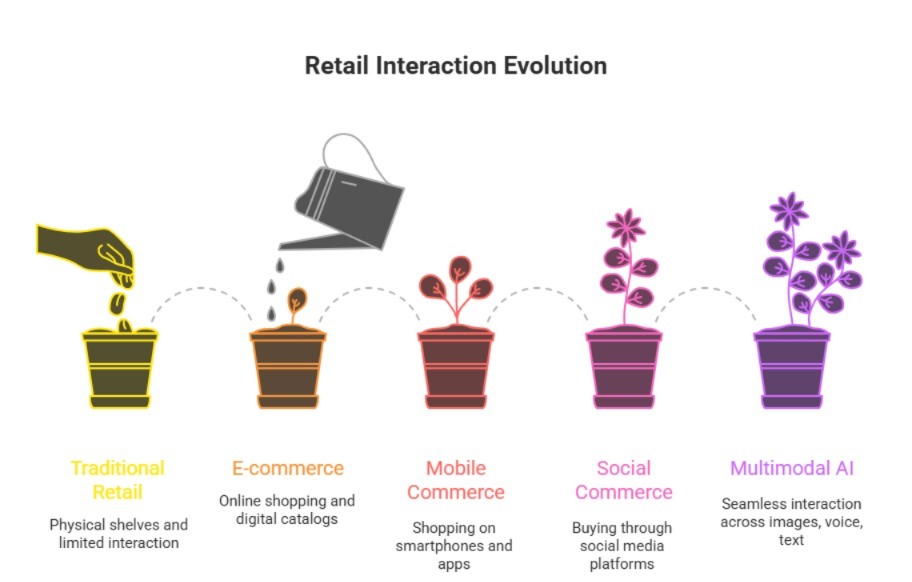

The Evolution of Retail Interaction

Retail has continuously adapted to how people shop, from physical shelves to e-commerce, mobile apps, and social commerce. Today, another major shift is underway: multimodal AI, where systems understand and respond across images, voice, text, and spatial environments.

Rather than relying on clicks or keywords alone, shoppers can now speak, scan, and see their way through the buying journey. This convergence of visual search, augmented reality (AR), and voice AI is redefining how brands connect with consumers. It will be a central theme at the NexGen Retail Summit 2026, where leaders explore the future of experience-led commerce.

Where Digital Shopping Stands Today

Modern retail already uses AI-powered recommendations, chatbots, and personalization engines. Visual discovery through social platforms, voice assistants for reordering, and AR try-ons are increasingly common.

According to Google, more than 50% of shoppers now say visual information is more important than text when making purchase decisions. Yet many retail experiences remain fragmented: voice, visual, and in-store journeys often operate in silos. The next step is integration, where AI understands context across multiple inputs simultaneously.

What Makes Multimodal AI Different

Multimodal AI in retail enables systems to process multiple types of data at once, combining what a shopper sees, says, and does to deliver richer, more human-like interactions.

For example, a customer can photograph a product, ask a voice assistant for alternatives, and instantly view an AR overlay showing fit, size, or placement, all within a single journey. AI researchers generally describe multimodal systems as moving beyond single-input intelligence toward contextual understanding that mirrors real-world decision-making.

Microsoft and Meta highlight multimodal AI as foundational to immersive commerce, enabling retail environments that respond dynamically to shopper intent rather than static commands.

Where Multimodal AI Is Already Making an Impact

Visual Search & Discovery

Shoppers can search by image rather than keywords, discovering products through photos, screenshots, or social content. Retailers report higher conversion rates as visual search removes friction from product discovery.
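One common way to implement image-based search is to compare embedding vectors: the shopper's photo and every catalog image are each turned into a list of numbers, and the closest catalog items are returned. The sketch below illustrates only that ranking step. The product IDs, the three-dimensional vectors, and the `visual_search` helper are hypothetical; a real system would obtain much larger embeddings from an image model and would likely use a dedicated vector index.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)


def visual_search(query_embedding, catalog, top_k=3):
    """Rank catalog items by similarity to the query image embedding."""
    scored = [
        (cosine_similarity(query_embedding, embedding), product_id)
        for product_id, embedding in catalog.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [product_id for _, product_id in scored[:top_k]]


# Hypothetical catalog: toy embeddings standing in for image-model output.
CATALOG = {
    "red-sneaker": [0.9, 0.1, 0.0],
    "blue-sneaker": [0.7, 0.3, 0.1],
    "oak-table": [0.0, 0.2, 0.9],
}

# Hypothetical embedding of a shopper's photo of a sneaker.
query = [0.85, 0.15, 0.05]
results = visual_search(query, CATALOG, top_k=2)
print(results)
```

With these toy vectors, the two sneakers rank ahead of the table because their embeddings point in nearly the same direction as the query.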

Augmented Reality Try-Ons

AR enables customers to visualize products, from makeup and eyewear to furniture and fashion, in real-world environments. This can reduce returns, boost confidence, and bridge the gap between online and physical retail.

Voice-Enabled Commerce

Voice assistants allow shoppers to search, compare, and reorder products hands-free. When combined with visual interfaces, voice becomes a powerful layer that simplifies navigation and decision-making.

In-Store Immersive Experiences

Multimodal AI powers smart mirrors, interactive displays, and AI-assisted sales tools that adapt in real time to customer behavior, foot traffic, and preferences.

Together, these use cases demonstrate how AI-powered retail innovation is shifting from transactional shopping to experiential engagement.

Enablers and Ethical Considerations

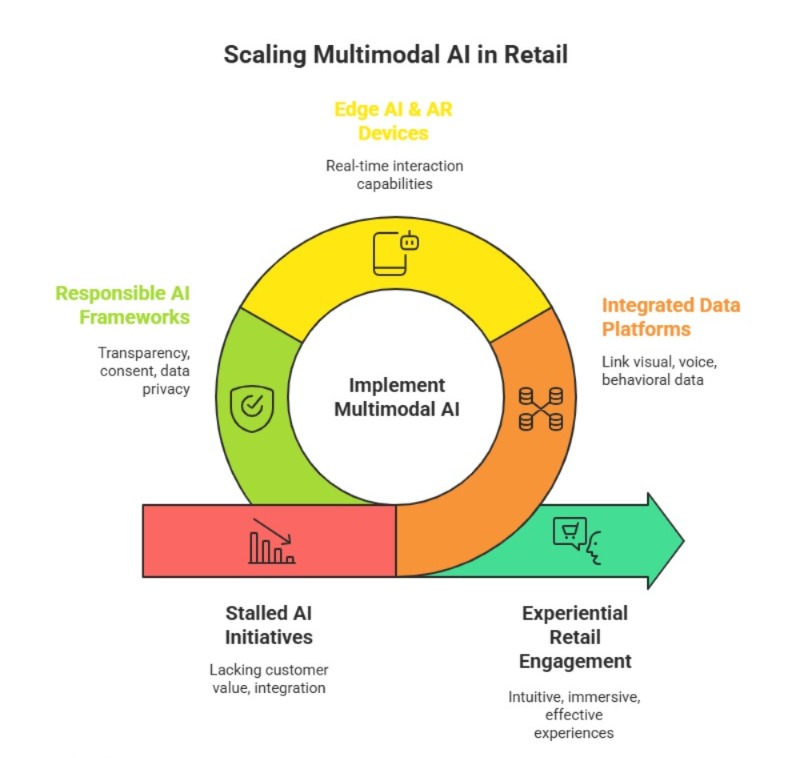

To scale multimodal AI effectively, retailers must invest in:

- Integrated data platforms linking visual, voice, and behavioral insights

- Edge AI and AR-capable devices for real-time interaction

- Responsible AI frameworks to ensure transparency, consent, and data privacy

However, challenges remain. Gartner notes that immersive AI initiatives can stall without clear customer value and cross-channel integration. Retailers must balance innovation with trust, ensuring AI enhances rather than overwhelms the shopping experience.

Preparing for the Multimodal Future

Retailers can begin their multimodal AI journey by:

- Auditing customer touchpoints to identify friction

- Piloting visual search or AR in high-impact categories

- Training teams to design AI-driven experiences, not just tools

- Ensuring ethical data usage and clear customer opt-in

- Partnering with technology ecosystems to scale safely

Multimodal AI represents a defining shift in retail, one where seeing, speaking, and experiencing converge into a single, intelligent journey. By meeting customers in the way they naturally interact with the world, retailers can create experiences that are more intuitive, immersive, and effective.

At TechTrek Events, we bring together industry leaders to explore how emerging technologies are reshaping commerce.

The NexGen Retail Summit provides a platform for these conversations, where retailers, brands, and innovators share insights on how AI-driven experiences are powering the next wave of retail growth.